Deleting Fast and Safe in ServiceNow

One of my first articles, Deleting Fast in ServiceNow, is my most popular and most controversial, and for good reason. This is the last in my series of follow-ups to my most popular articles, at least for now!

To summarize my prior article, I compared the delete APIs available in ServiceNow to see which one removed records the fastest. I found that GlideRecord deleteMultiple running from a workflow had the best execution time overall.

The Controversy

I slammed the GlideRecord deleteRecord method pretty hard, since it was over 1,000 times slower, but I didn’t really unpack the need to sometimes not fire business rules, notifications, and workflows while deleting. The deleteMultiple option does trigger business rules, and all of the above, by default. However, the method setWorkflow(false) does actually work with deleteMultiple as well!

That being said, it’s typically safer to disable any on-delete notifications and business rules and then run deleteMultiple. You may also want to consider turning off audit delete beforehand, otherwise you’ll have to clean up the audit table records with deleteMultiple again (unless you want the safety net).

The method deleteRecord still has its place when you want to delete a single record, or fewer than a handful of records, and in some ways it can be a bit safer since it is slower to delete.
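To make the setWorkflow(false) point concrete, here is a minimal sketch of a quiet bulk delete. The function name `bulkDeleteQuietly`, the table `u_demo`, and the query `active=false` are hypothetical, and the GlideRecord constructor is passed in as a parameter purely so the function can be exercised outside an instance. Treat it as an illustration under those assumptions, not as the one right way to do it.

```javascript
// Sketch only. On an instance you would pass the global GlideRecord
// as GlideRecordCtor; the parameter exists so this can run anywhere.
function bulkDeleteQuietly(GlideRecordCtor, tableName, encodedQuery) {
    // Safety guard (my own addition): an empty query would match every
    // row in the table, so refuse to run without a filter.
    if (!encodedQuery) {
        return false;
    }
    var gr = new GlideRecordCtor(tableName);
    gr.addEncodedQuery(encodedQuery);
    gr.setWorkflow(false); // skip business rules, notifications, workflows
    gr.deleteMultiple();   // delete every matching row in one call
    return true;
}
```

In a background script this would be called as something like `bulkDeleteQuietly(GlideRecord, 'u_demo', 'active=false')`. The empty-query guard is the part I would keep no matter what else changes.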

Best Practice

Before doing any mass deleting, I would strongly recommend reading ServiceNow’s KB0717791 on Mass Deletion recommendations. There is also a good resource, called Safety Tips for writing Background Scripts, which covers a lot of the common mistakes people make while doing things like deleting. If you are deleting small to medium datasets, it actually isn’t a bad idea to run the delete as a background script, since a rollback context is generated, which allows you to restore the data.

Exploring More

As promised, I looked into some additional factors that could come into play when deleting: namely auditing, big fields with data, and a baseline deletion for reference.

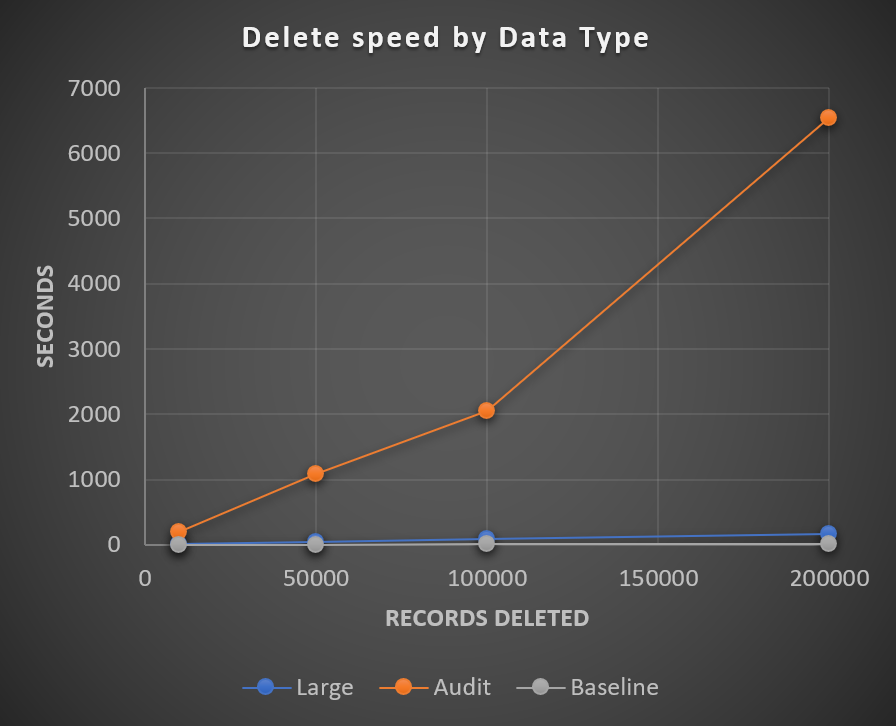

A comparison that shows delete speed by different types of records.

Auditing Impact

For testing auditing, I had 3 small string fields similar to the baseline and just enabled table-level auditing. Unsurprisingly, turning on auditing on a table drastically slows down the delete operation, as it has to check cascade reference rules, back up a copy of the record onto the audit table, etc. What is almost surprising, though, is how linear this impact is: the deletion time increases by 0.03s per record processed. It goes to show how important it is to minimize auditing unless it is absolutely necessary.

Big Fields Impact

For testing big fields, I added 3 large string fields with a 4,000 character limit and populated them with random data. The impact is noticeable, taking 150s longer to delete 200k records than the baseline, but overall the linear rate increase is only about 0.0008s per record processed. From my research, this seems to boil down to the buffer pool size, where the data is cached in case of an undo while it is being deleted.

Baseline Comparison

My baseline table only had 3 small string fields, with no auditing. It took <1s to delete 10k records, and less than 10s to delete 200k records - which puts the base speed for record deletes at around 1 record every 0.00005s. Mind-blowingly fast! So if you want to reduce delete time, it has more to do with the data size and options (auditing) than the record count.
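The per-record rates above can be turned into a rough back-of-the-envelope estimator. This is a sketch that assumes the rates from my tests extrapolate linearly and simply add together, which I have not verified for combined cases; the function and constant names are made up for illustration.

```javascript
// Per-record rates taken from the measurements in this article.
var BASELINE_SEC_PER_RECORD = 0.00005;        // 3 small string fields, no auditing
var AUDIT_EXTRA_SEC_PER_RECORD = 0.03;        // added by table-level auditing
var BIG_FIELDS_EXTRA_SEC_PER_RECORD = 0.0008; // added by 3 large string fields

// Assumption: costs scale linearly with record count and stack additively.
function estimateDeleteSeconds(recordCount, options) {
    options = options || {};
    var perRecord = BASELINE_SEC_PER_RECORD;
    if (options.audited) {
        perRecord += AUDIT_EXTRA_SEC_PER_RECORD;
    }
    if (options.bigFields) {
        perRecord += BIG_FIELDS_EXTRA_SEC_PER_RECORD;
    }
    return recordCount * perRecord;
}
```

For 200k records this works out to roughly 10s for the baseline, roughly 170s with big fields, and roughly 6,010s (about 100 minutes) with auditing on, which shows why auditing dominates everything else.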

Advanced Deleting - Time Slice Method

I wanted to throw in a strong mention that some tables in ServiceNow, namely sys_audit, are notorious for being large and sharded, and have to be handled specially when it comes to deleting (and other DB operations). There is a known technique where you step day by day, and sometimes hour by hour, and delete all the records within each timeframe. This method takes advantage of the data being indexed by time/created on, and sharded/broken up by time. This way you are strategically retrieving and accessing data in sequence, and removing it surgically. I could probably write a full article on the algorithm - feel free to comment if interested!
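As a rough illustration of the stepping idea (not the full algorithm, and not something I have benchmarked), the sketch below builds consecutive time windows and runs one deleteMultiple per window against sys_created_on. The helper names are hypothetical, the GlideRecord constructor is passed in so the code can run outside an instance, and plain JavaScript dates formatted as UTC strings stand in for what would more likely be GlideDateTime on a real instance. How your instance interprets the time zone of those strings is something to verify before relying on it.

```javascript
// Format epoch milliseconds as 'YYYY-MM-DD HH:mm:ss' in UTC.
function formatUtc(ms) {
    return new Date(ms).toISOString().slice(0, 19).replace('T', ' ');
}

// Build consecutive [start, end) windows of sliceHours each.
function buildTimeSlices(startMs, endMs, sliceHours) {
    var sliceMs = sliceHours * 60 * 60 * 1000;
    if (!(sliceMs > 0)) {
        throw new Error('sliceHours must be a positive number');
    }
    var slices = [];
    for (var from = startMs; from < endMs; from += sliceMs) {
        var to = Math.min(from + sliceMs, endMs); // clamp the last window
        slices.push({ start: formatUtc(from), end: formatUtc(to) });
    }
    return slices;
}

// Delete one window at a time so each query walks a narrow,
// time-ordered range instead of the whole table.
function deleteBySlices(GlideRecordCtor, tableName, slices) {
    slices.forEach(function (slice) {
        var gr = new GlideRecordCtor(tableName);
        gr.addQuery('sys_created_on', '>=', slice.start);
        gr.addQuery('sys_created_on', '<', slice.end);
        gr.deleteMultiple();
    });
}
```

A day-by-day pass would use `buildTimeSlices(startMs, endMs, 24)`, and dropping the last argument to 1 gives the hour-by-hour variant for the busiest periods.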

Parting thoughts

It’s good to be curious, and to see how far we can push the needle. I want to leave you with the fact that there are even more aspects to explore.

Indexing - Typically after data is deleted, the indexing data is not automatically re-processed. This sometimes can lead to the index portion being bigger than the actual table.

Table growth monitoring is a good practice. There is a self-service catalog item on the ServiceNow Support site to pull the top 20 or more tables on your instance. This is a good thing to check regularly. There is also a technology that ServiceNow might be releasing more widely in the future, called Instance Observer, which has some capabilities for table growth monitoring.

MariaDB explains the complexities of big deletes on this KB page, https://mariadb.com/kb/en/big-deletes/. While I think this is really good info, how far you can really optimize deletes boils down to the options and decisions ServiceNow made in its database configuration. Some answers we may never know (unless you work for ServiceNow).